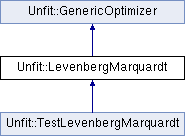

A class to implement the Levenberg Marquardt optimization method. More...

#include <LevenbergMarquardt.hpp>

Public Member Functions | |

| LevenbergMarquardt () | |

| virtual | ~LevenbergMarquardt () |

| int | FindMin (GenericCostFunction &cost_function, std::vector< double > &coordinates) override |

| Implements the Levenberd-Marquardt optimization method. More... | |

| void | Reset () override |

Public Member Functions inherited from Unfit::GenericOptimizer Public Member Functions inherited from Unfit::GenericOptimizer | |

| GenericOptimizer () | |

| virtual | ~GenericOptimizer () |

| void | ResetGenericOptimizer () |

| virtual double | GetCost (std::size_t index=0) const noexcept |

| virtual bool | GetIsPopulationBased () const noexcept |

| virtual std::size_t | GetNumberOfIterations () const noexcept |

| virtual std::size_t | GetNumberOfFunctionEvaluations () const noexcept |

| virtual std::vector< std::vector< double > > | GetPopulation () const |

| virtual std::vector< double > | GetSolution (std::size_t index=0) const |

| virtual void | SetPopulation (const std::vector< std::vector< double >> &population) |

Private Member Functions | |

| std::vector< double > | LoopUntilWithinBounds (const std::vector< double > &point, std::vector< double > &hlm) |

| std::vector< double > | CalculateResiduals (GenericCostFunction &cost_function, const std::vector< double > &coordinates) |

| void | FindJacobian (GenericCostFunction &cost_function, const std::vector< double > &residuals, const std::vector< double > &coordinates, bool one_sided_difference=true) |

| void | BroydenUpdate (const std::vector< double > &residuals_new, const std::vector< double > &residuals, const std::vector< double > &hlm, Matrix &jacobianT) |

Private Attributes | |

| Matrix | jacobian_ |

| std::size_t | dimensions_ |

| std::size_t | observation_size_ |

| double | stored_cost_ |

Friends | |

| class | TestLevenbergMarquardt |

Additional Inherited Members | |

Public Attributes inherited from Unfit::GenericOptimizer Public Attributes inherited from Unfit::GenericOptimizer | |

| Unfit::Bounds | bounds |

| Unfit::Options | options |

Protected Member Functions inherited from Unfit::GenericOptimizer Protected Member Functions inherited from Unfit::GenericOptimizer | |

| virtual bool | CalculateCost (GenericCostFunction &CostFunction, std::vector< double > &x) |

| void | GeneratePopulation (GenericCostFunction &CostFunction, std::size_t dimensions) |

| void | GenerateRandomEngines () |

| virtual bool | IsConverged (const std::vector< double > &best_member, std::size_t truncated_index=0) const |

| Checks to see if the population has converged. More... | |

| virtual void | PrintInitialOutput (double best_cost) const |

| virtual void | PrintIterationOutput (double best_cost) const |

| virtual void | PrintFinalOutput () const |

| virtual void | SortPopulation () noexcept |

Protected Attributes inherited from Unfit::GenericOptimizer Protected Attributes inherited from Unfit::GenericOptimizer | |

| std::vector< std::vector< double > > | population_ |

| std::vector< std::mt19937 > | random_engines_ |

| std::atomic< std::size_t > | function_evaluations_ |

| std::atomic< std::size_t > | iterations_ |

| bool | is_population_based_ |

Detailed Description

A class to implement the Levenberg Marquardt optimization method.

This class implements the Levenberg Marquardt optimization method. It requires a cost function (written by the user) and an initial guess of the unknown parameters. It then proceeds to try to find a set of parameters that minimize the cost function.

The inspiration for this implementation was derived from the book: Methods for Non-linear Least Squares Problems, by K. Madsen, H.B. Nielsen, O. Tingleff. At the time of writing, this was freely available online. There is also a sample matlab implementation on their website that was useful for getting the Broyden rank one updates to work.

Constructor & Destructor Documentation

◆ LevenbergMarquardt()

| Unfit::LevenbergMarquardt::LevenbergMarquardt | ( | ) |

The constructor sets all of the parameters to their defaults.

◆ ~LevenbergMarquardt()

|

inlinevirtual |

As we are deriving from this class, the destructor should be virtual. In this case an empty destructor is just fine as we are putting everything into std::vectors which take care of themselves.

Member Function Documentation

◆ BroydenUpdate()

|

private |

Rather than calculate the jacobian matrix via finite differences at each iteration, for expensive cost functions in particular, it is worth using Brodyen Rank One Updates. These given an estimate of the step size and do not require any function evaluations. See the text quoted in the class documentation for details.

- Parameters

-

residuals_new the residuals at the proposed parameter estimate residuals the residuals at the current parameter estimate hlm the step size between the current and new parameter estimates jacobianT the transpose of the jacobian matrix (updated)

◆ CalculateResiduals()

|

private |

Calculate the residuals (costs) associated with the the current estimate of the unknown parameters. NOTE that you should never call the cost function directly, call this method instead. This method also tracks the number of function evaluations.

- Parameters

-

cost_function the function to be used to calculate the residuals coordinates the estimate of the unknown parameters

- Returns

- a vector containing the residuals associated with the current coordinates

◆ FindJacobian()

|

private |

This method computes the first derivatives of the cost function with respect to each of the unknown parameters and stores the values in the jacobian matrix. Note that it is more efficient to store JT (J-transpose) so that is what is done here.

- Parameters

-

cost_function cost functions to calculate the residuals residuals a vector of residuals at the current guess. Only used for one-sided differencing; can be empty for two-sided differencing. coordinates vector of the coefficients of the function one_sided_difference select between one sided (forward) and two sided finite different approximations for the Jacobian calculation

◆ FindMin()

|

overridevirtual |

Implements the Levenberd-Marquardt optimization method.

This method takes in a cost function (user supplied) and an initial guess for the unknown parameters and uses the Levenberd-Marquardt algorithm to try to find the set of parameters that provides a minimum cost. This is implemented as a nonlinear least squares problem, so the cost will always be >= 0. This is known as a local optimizer, so the results you get will depend on how good the initial guess is.

Intended use: auto rc = object.FindMin(cost_function, coordinates)

- Parameters

-

cost_function residuals to be tested coordinates vector of initial guesses

- Returns

- 0 if successful

1 if empty coordinates are passed in

2 if the initial coordinates are out of bounds

3 if the the data_points < number of variables

4 if the maximum number of function evaluations was exceeded

5 if the maximum number of iterations has been exceeded

6 if the initial cost is not finite

7 if the solution breaks down (nu gets very large)

Implements Unfit::GenericOptimizer.

◆ LoopUntilWithinBounds()

|

private |

This method calculates the new coordinates based on the old coordinates and the step size, hlm. If the new coordinates fall within the bounds of the domain that is all it does. If the new coordinates are outside the bounds of the domain, the step size is iteratively reduced by a factor of two until it is inside the domain.

- Parameters

-

point current coordinates hlm step to be taken

- Returns

- the new coordinates (point + hlm)

◆ Reset()

|

overridevirtual |

Resets everything back to their default values. This is equivalent to destroying the object and creating a new one.

Implements Unfit::GenericOptimizer.

Friends And Related Function Documentation

◆ TestLevenbergMarquardt

|

friend |

For unit testing purposes only

Member Data Documentation

◆ dimensions_

|

private |

Number of parameters to find

◆ jacobian_

|

private |

Jacobian matrix

◆ observation_size_

|

private |

Number of experimental observations

◆ stored_cost_

|

private |

Variable to store the current cost of the solution

The documentation for this class was generated from the following files:

- include/LevenbergMarquardt.hpp

- src/LevenbergMarquardt.cpp

1.8.13

1.8.13